(A proposition for Clément Vidal’s Starivore competition, sent to him on 4/10/2015 at 2:14 PM: http://www.allourideas.org/highenergyastrobiology)

CONJECTURE: Are Gamma Ray Bursts part of the life cycle of stellar-spanning prokaryote colony organisms?

This idea combines my observation of superheated H2O nucleation in a microwave oven, gamma ray bursts (GRBs), nuclear fusion fizzles, Clément Vidal’s Starivore hypotheses, Paul Stamets’ mycology work, and the report that radiotrophic fungi appear to convert gamma radiation into chemical energy for food and growth.

BACKGROUND

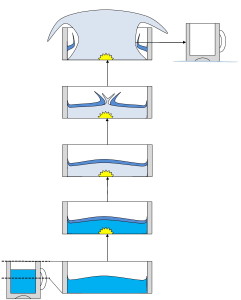

Observation of superheated H2O nucleation

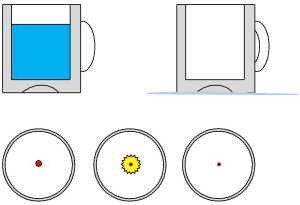

Shortly after reading an article about Gamma Ray Bursts (GRBs) in Science News, circa the early 1990s, I noticed an interesting parallel between the GRB description and an event that had happened at home a few weeks before. I had heated a mug of water in a microwave oven and did not immediately retrieve it when the oven alarm went off, but went to get it some time later. The water had cooled, so I nuked it again. There was an explosive burst inside the chamber, and I discovered the water had exploded out of the mug. Most had flashed to steam and the remainder was on the oven floor.

My theory was that (a) the water had cooled, and the surface had cooled most of all, so that the meniscus formed an impermeable membrane for the duration of the second heating event; and (b) when the microwave energy superheated the water core, the water, expanding as a gas, pushed against the cooler underside of the surface membrane, forcing it to pop at a weak point, just like a balloon filled with too much air.

I have wondered ever since whether GRBs might be the artefact of a similar process. I have since discovered that water can superheat “when heated in a microwave oven in a container with a smooth surface. That is, the liquid reaches a temperature slightly above its normal boiling point without bubbles of vapour forming inside the liquid. The boiling process can start explosively when the liquid is disturbed…This can result in spontaneous boiling (nucleation) which may be violent enough to eject the boiling liquid.”[note 1]

Here is the Conjecture

The parallel between microwave oven ejecta and Gamma Ray Burst ejecta led me to think about the container.

Is there a parallel between coffee mug containers and solar system containers?

Would a solar system whose star goes nova or supernova behave like a superheating liquid in a coffee mug?

Could we reverse-engineer what Gamma Ray Bursts (GRBs) signal?

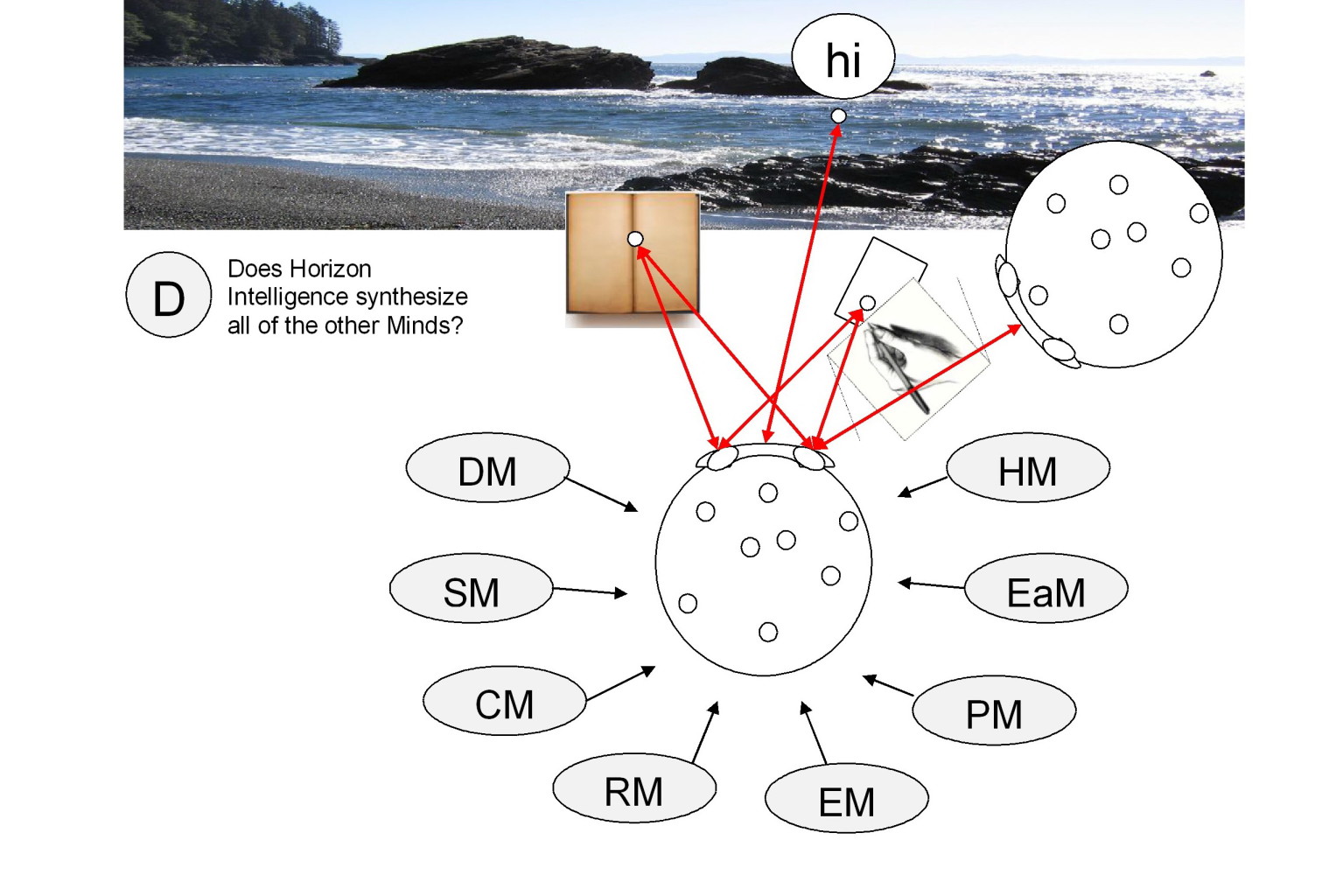

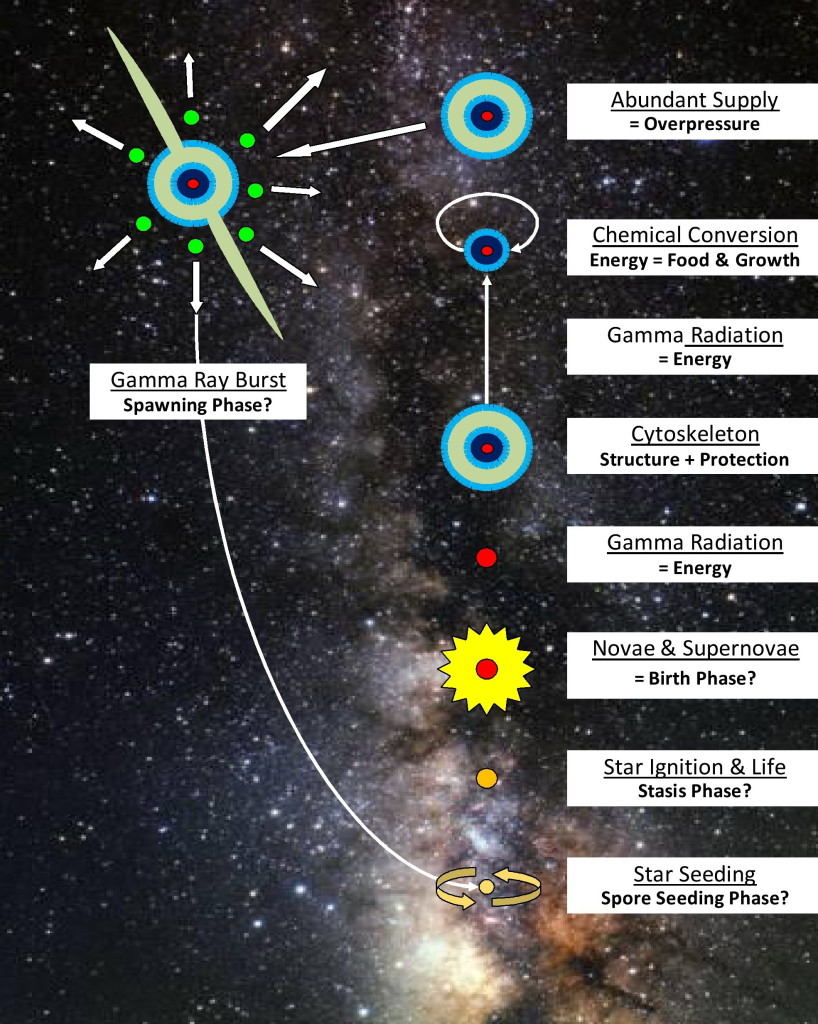

Are GRBs part of a Starivore species’ life cycle?

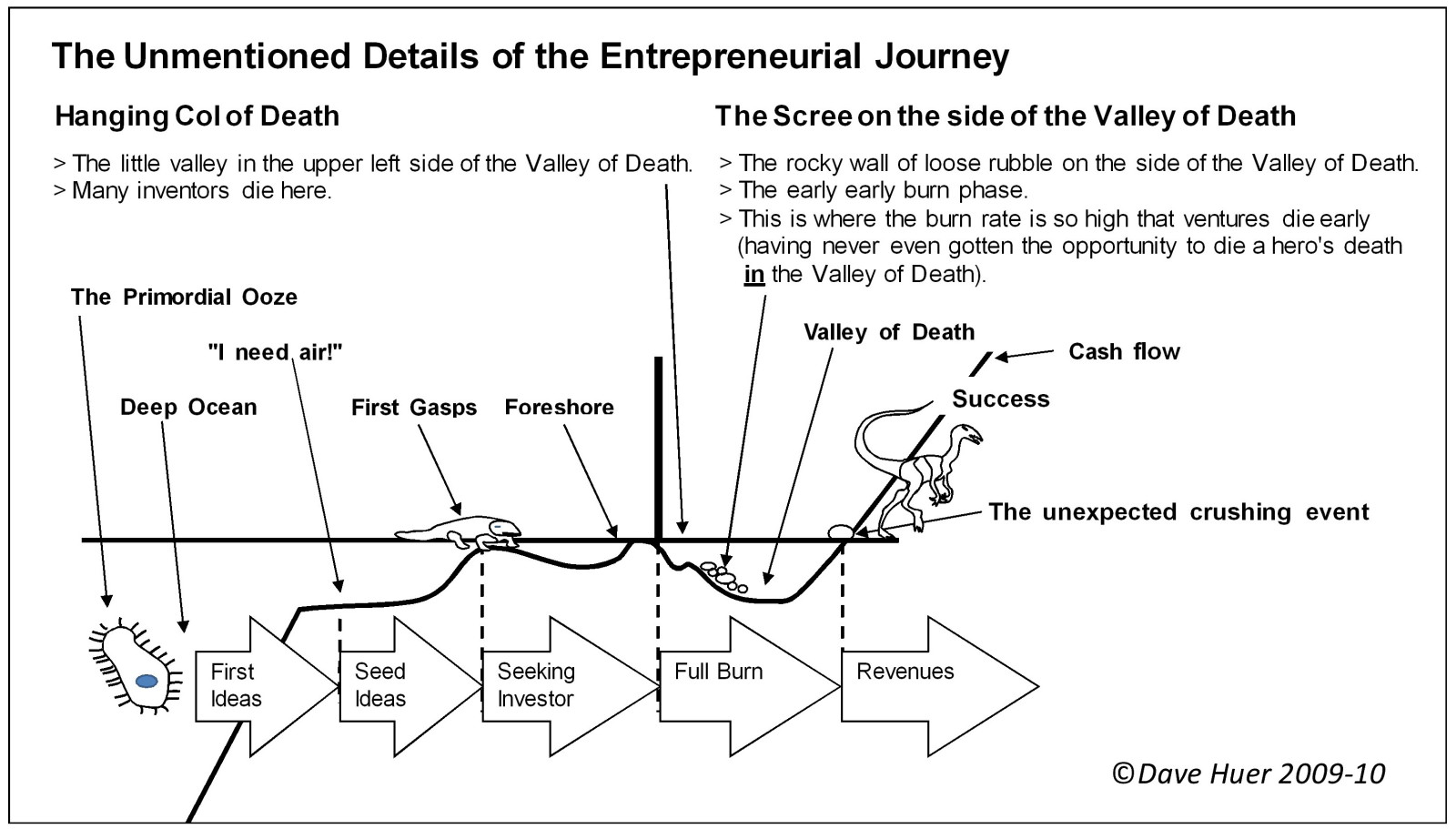

Reverse-Engineering GRBs

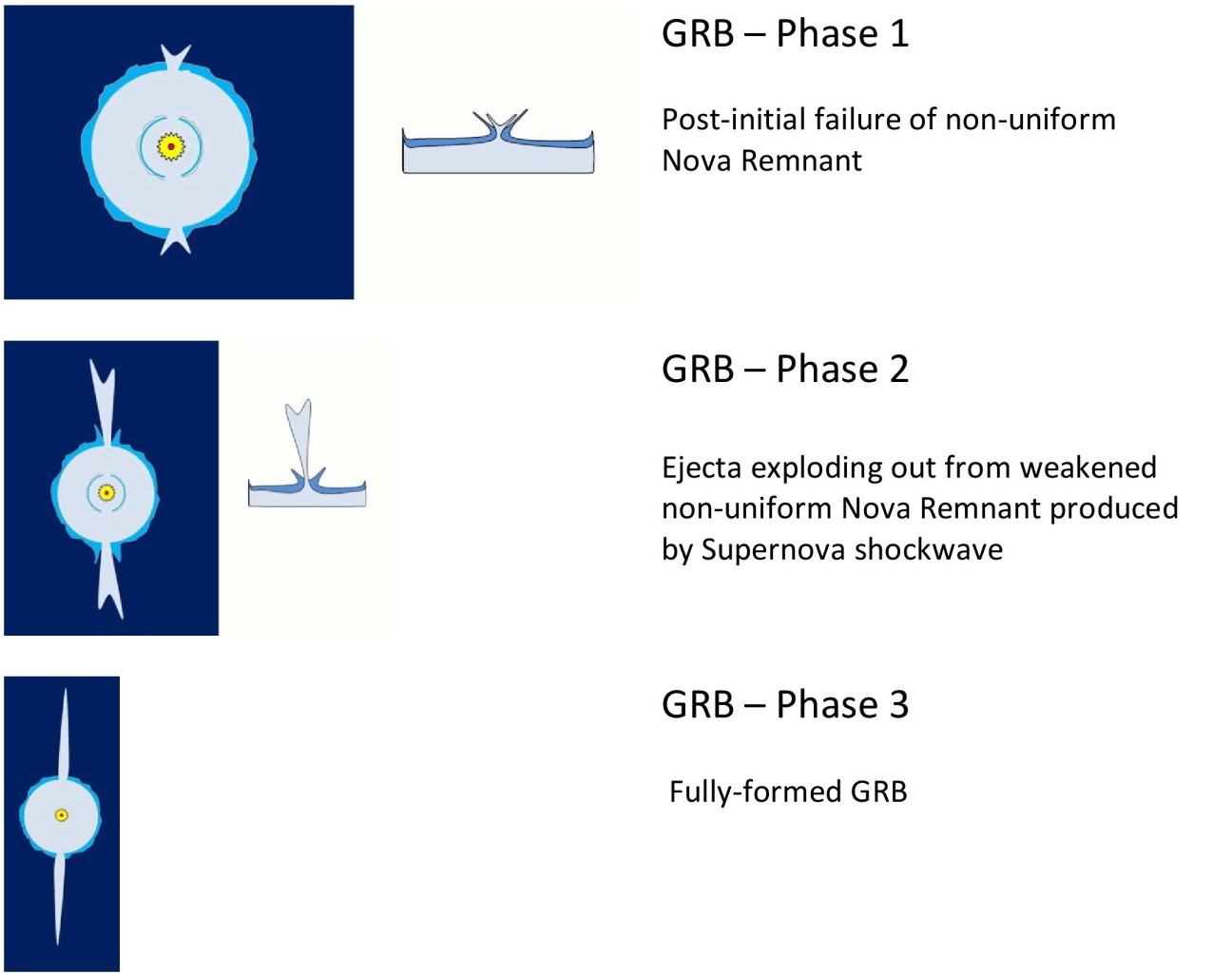

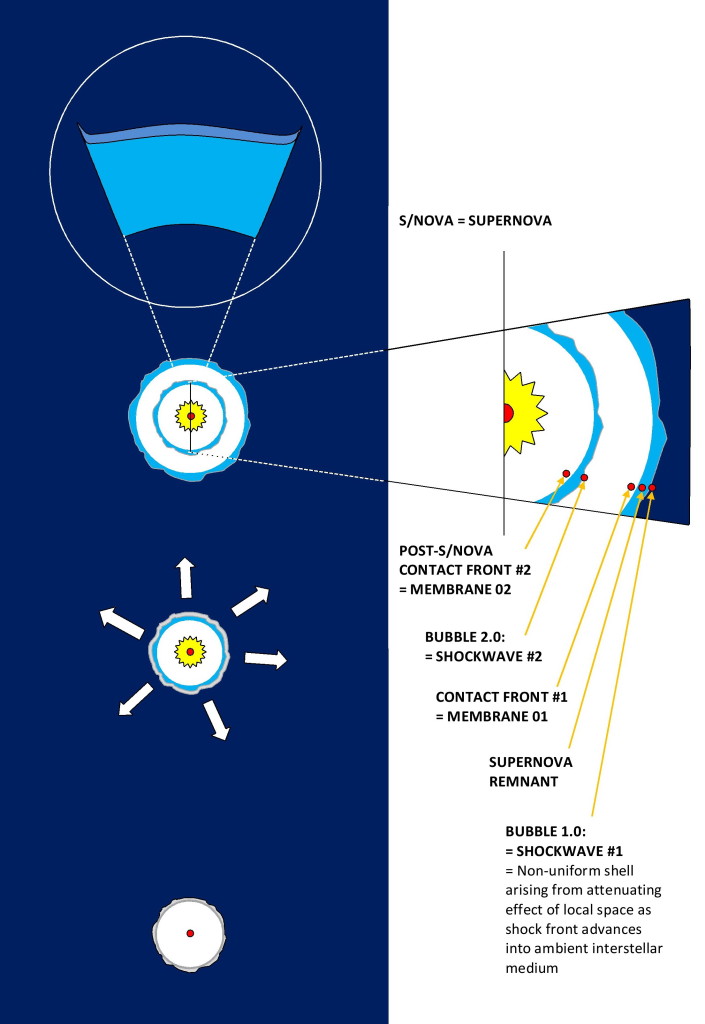

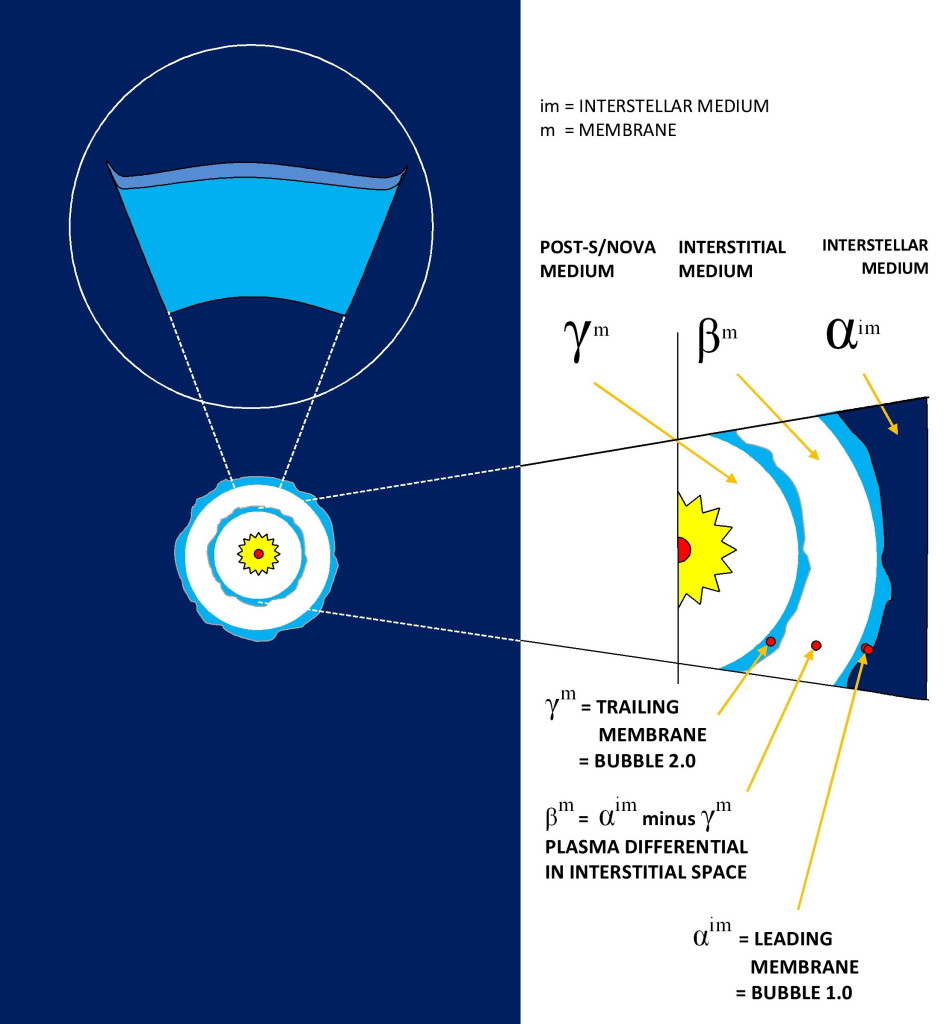

A supernova briefly outshines an entire galaxy, “radiating as much energy as the Sun or any ordinary star is expected to emit over its entire life span, before fading from view over several weeks or months. The extremely luminous burst of radiation expels much or all of a star’s material at a velocity of up to 30,000 km/s (10% of the speed of light), driving a shock wave into the surrounding interstellar medium. This shock wave sweeps up an expanding shell of gas and dust called a supernova remnant” [note 2]. Thinking about GRBs led me to wonder whether an advancing shock front is similar to a meniscus membrane.

And whether the advancing supernova shock front, pressing against the material of the solar system and the interstellar medium, is similar to the superheated H2O gas advancing against an H2O membrane.

Could the event we see, the GRB, be the result of over-pressure during a late stage of the explosive sequence?

Are GRBs evidence of a weakened section of the advancing contact front?

How do non-uniformities in the solar system and interstellar medium, as they are mixed by the shock wave and contact front, affect the GRB event?
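The ejecta speed quoted from [note 2] (up to 30,000 km/s, described as 10% of the speed of light) can be sanity-checked with simple arithmetic. This is a minimal sketch, assuming the SI value c = 299,792.458 km/s; the helper name fraction_of_light_speed is hypothetical and only for illustration.

```python
# Speed of light in vacuum, km/s (exact by SI definition).
C_KM_S = 299_792.458


def fraction_of_light_speed(v_km_s: float) -> float:
    """Return a speed as a fraction of c (hypothetical illustrative helper)."""
    return v_km_s / C_KM_S


# Upper supernova ejecta speed quoted in the text, km/s.
ejecta = 30_000.0
beta = fraction_of_light_speed(ejecta)
print(f"{ejecta:,.0f} km/s is {beta:.1%} of c")  # prints "30,000 km/s is 10.0% of c"
```

The result is about 0.1, which matches the quoted “10% of the speed of light.”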

Is the Advancing Shock Front the pre-GRB membrane?

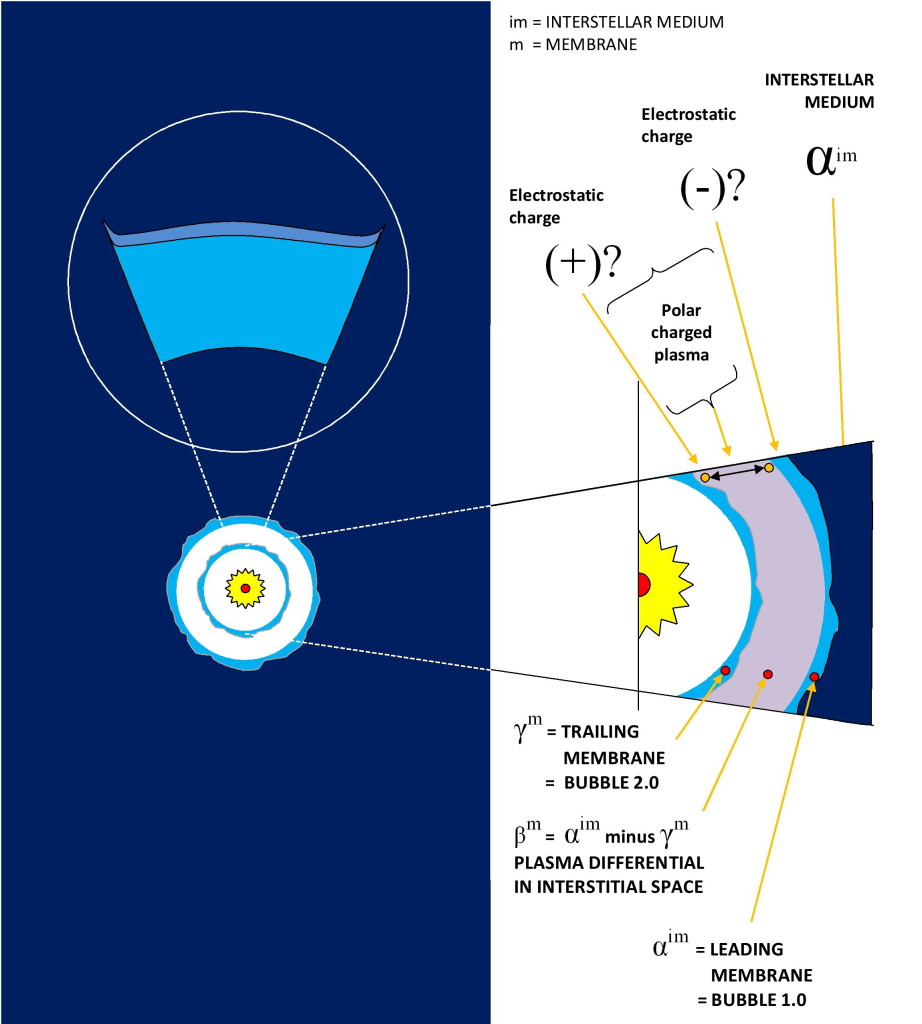

Stellar explosions do not expand perfectly uniformly. There will be protuberances and deflections in the outgassing shock-front envelope, along with density variations and electromagnetic eddies in the interstitial space behind the advancing shock front, and secondary turbulence behind it.

What occurs in Interstitial Space behind the shock front? And what happens if the reaction is a fizzle (i.e., a nova) [note 3]? Do fizzles produce two shock fronts, with polar-charged membranes?

For testing:

Are there polar charge spreads in the GRB ejecta and between ejecta funnel pairs?

If charge intensity differs, could this indicate an archaea-habitable “Goldilocks” field?

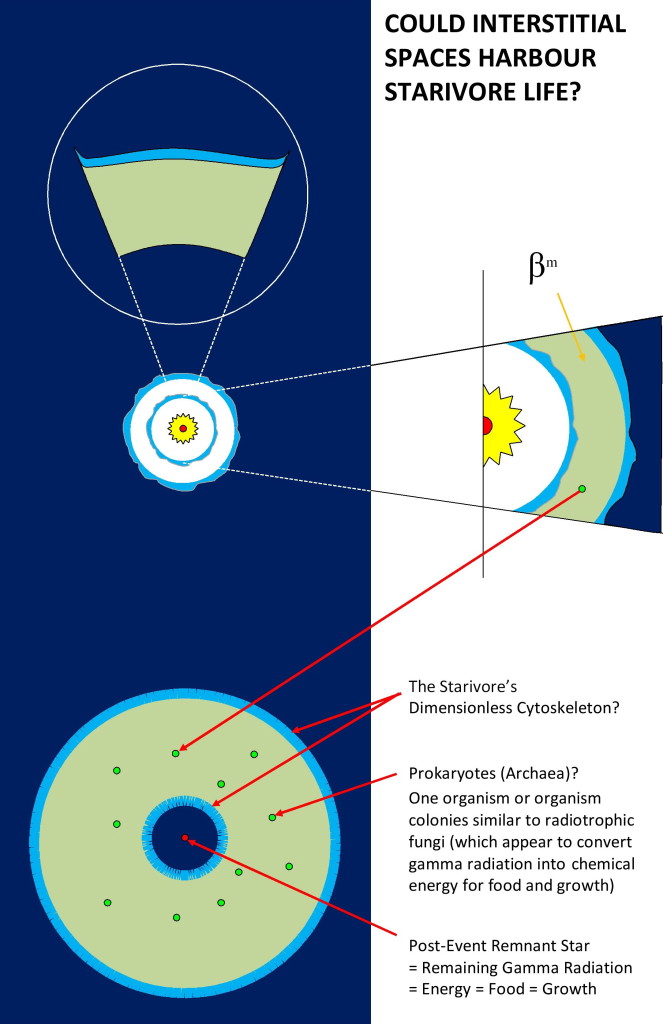

And could Interstitial Space harbour nurseries of Archaea colony organisms?

Could colonies exist there, feeding on an ocean of radiation, much as radiotrophic fungi appear to do on Earth [note 4]?

Could the pressure of their growth produce an expansion that overwhelms the containing membrane? Does that overwhelming expansion produce the GRB event?

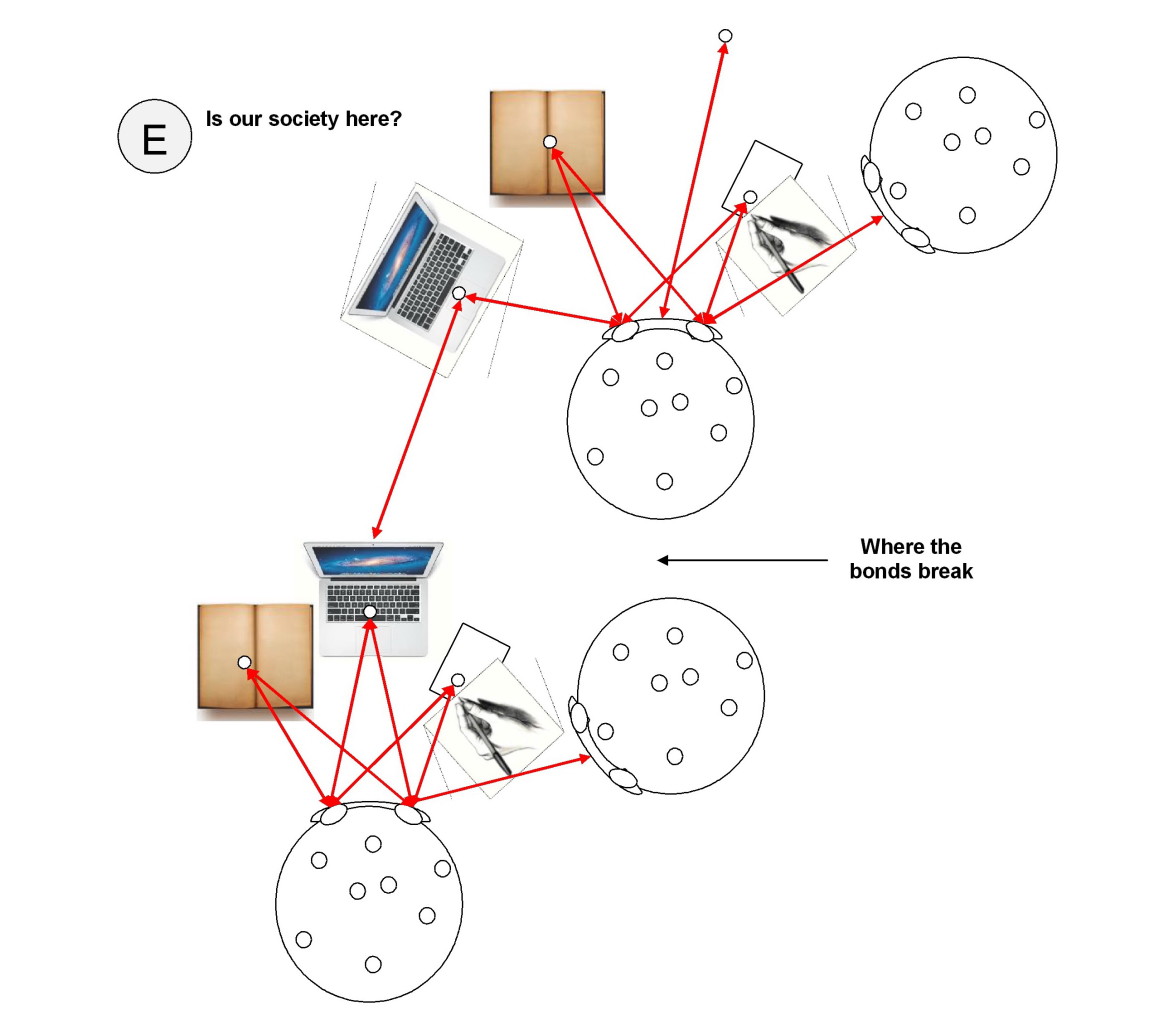

And, thinking on the truly large scale, could the expansion of the observable universe be the result of a Creation-sized (with a capital “C”) GRB event?

Is our universe merely one truly big organism, the Big-Bangivore (“Bangivore” for short [note 5]), among a shoaling school of Bangivores spawning progeny through an expanse we cannot neatly comprehend?

Could GRBs (and the Big Bang cosmological model) point to a spawning phase in the life cycle of prokaryote Starivores and Bangivores?

Notes

(1) https://en.wikipedia.org/wiki/Microwave_oven

(2) https://en.wikipedia.org/wiki/Shock_waves_in_astrophysics

(3) The “fizzles” component came from learning about the fusion sequence whilst writing a paper on nuclear fusion. I’d gone to adult high school to brush up on math, science, and machine shop before going to industrial design college. We each wrote a paper on a physics subject; mine was on the fusion equation sequence, and the instructor (a physics PhD) informed me he had to lock it in the school safe (!). At human scale, a fizzle occurs when a nuclear device’s actual explosive yield falls well short of its designed (expected) yield. https://en.wikipedia.org/wiki/Fizzle_%28nuclear_test%29

(4) https://en.wikipedia.org/wiki/Archaea and https://en.wikipedia.org/wiki/Radiotrophic_fungus

(5) There will be differently scaled Bangivori, and thence a taxonomy of Lesser Bangivores, Big Bangivores, and Greater Bangivores. If they all spawn from the same yield event, then of course at least one type will produce greater bang for the buck.